libxul.so startup coverage

During the work leading to my previous post, I used a modified version of icegrind and a modified version of Taras' systemtap script to gather data about vfs_read/vfs_write and file-mapping-induced I/O. The latter was used to create the graphs in that previous post.

Using both simultaneously, I could get some interesting figures, as well as some surprises.

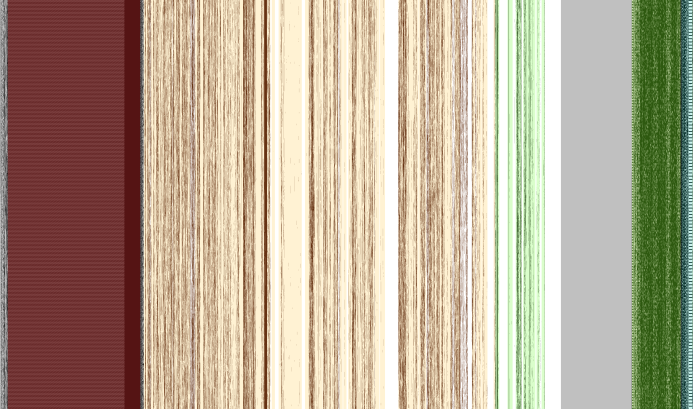

The above graph represents kernel readaheads on libxul.so, vs. the actual accesses to that file. Each pixel on the image (click to zoom in) represents a single byte in libxul.so. Each vertical line thus represents a page worth of data (4096 bytes). Pixel luminosity gives information about how the given byte in libxul.so was reached:

- White: never accessed

- Light colour: kernel readahead as seen by a systemtap `__do_page_cache_readahead` probe

- Dark colour: actual read or write as spotted by icegrind

The most important ELF sections are highlighted with the same colour scheme as in the previous graphs.
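The layout described above can be sketched in a few lines of Python. This is only an illustration of the mapping, not the script that produced the graph: the function names, the colour labels, and the choice to let an actual access take precedence over a readahead are all assumptions.

```python
PAGE_SIZE = 4096  # one vertical line of the image is one page, as stated above

# Hypothetical labels for the three luminosity levels.
WHITE, LIGHT, DARK = "white", "light", "dark"


def pixel_for_offset(offset):
    """Map a byte offset in libxul.so to (column, row) in the image.

    Each column holds one page, so the column is the page number and
    the row is the byte's position within that page.
    """
    return offset // PAGE_SIZE, offset % PAGE_SIZE


def classify(offset, readahead_ranges, accessed_offsets):
    """Pick a luminosity for one byte.

    readahead_ranges: iterable of (start, length) byte ranges seen by the
                      readahead probe (assumed input format).
    accessed_offsets: set of byte offsets reported as read or written.
    Assumption: an actual access wins over a readahead.
    """
    if offset in accessed_offsets:
        return DARK
    if any(start <= offset < start + length for start, length in readahead_ranges):
        return LIGHT
    return WHITE
```

For example, `pixel_for_offset(4097)` lands on the second column, second row, since byte 4097 is the second byte of the second page.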

The interesting part here is the following figures:

- Excluding `.eh_frame`, 22,248,432 bytes are readahead by the kernel, out of which 9,764,529 bytes are actually accessed (44%)

- 5,437,560 bytes are readahead from the `.rela.dyn` section, out of 5,437,560 (100%), while 4,640,040 are actually accessed (85%)

- 12,134,040 bytes are readahead from the `.text` section, out of 14,419,608 (84%), while 2,771,736 are actually accessed (19%)

- 1,574,224 bytes are readahead from the `.rodata` section, out of 2,037,072 (77%), while 217,338 are actually accessed (11%)

- 2,001,872 bytes are readahead from the `.data.rel.ro` section, out of 2,001,872 (100%), while 1,689,318 are actually accessed (84%)

- 469,984 bytes are readahead from the `.data` section, out of 469,984 (100%), while 256,319 are actually accessed (55%)
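The percentages above can be re-derived from the raw byte counts. The short sketch below does so; the dictionary layout and the helper name `pct` are only illustrative. Judging from the numbers, the per-section "actually accessed" percentages are relative to the section size rather than to the amount read ahead, and the code follows that reading.

```python
# Per section: (bytes read ahead, section size, bytes actually accessed),
# copied from the list above.
SECTIONS = {
    ".rela.dyn":    (5_437_560, 5_437_560, 4_640_040),
    ".text":        (12_134_040, 14_419_608, 2_771_736),
    ".rodata":      (1_574_224, 2_037_072, 217_338),
    ".data.rel.ro": (2_001_872, 2_001_872, 1_689_318),
    ".data":        (469_984, 469_984, 256_319),
}

# Whole-file totals excluding .eh_frame, also from the list above.
TOTAL_READAHEAD = 22_248_432
TOTAL_ACCESSED = 9_764_529


def pct(part, whole):
    """Percentage of `whole` covered by `part`, rounded to an integer."""
    return round(100 * part / whole)


if __name__ == "__main__":
    print(f"total: {pct(TOTAL_ACCESSED, TOTAL_READAHEAD)}% of readahead bytes accessed")
    for name, (readahead, size, accessed) in SECTIONS.items():
        print(f"{name}: {pct(readahead, size)}% read ahead, "
              f"{pct(accessed, size)}% accessed")
```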

The actual use numbers would be more meaningful at the function and data structure level than at the instruction and data byte level, but they already give good insight: there is a lot to gain from both function and data reordering. Also, it appears relocation sections account for more than 25% of all the libxul.so reads on x86-64. This proportion is most certainly smaller on x86, because each relocation there is at most half the size of an x86-64 relocation.
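To make the relocation size comparison concrete, here is a small sketch that computes the entry sizes from the standard ELF structure layouts using Python's `struct` module. x86-64 uses `Elf64_Rela` entries, while x86 normally uses `Elf32_Rel` entries. The last step, which projects the `.rela.dyn` size onto x86, assumes the same number of relocations on both architectures, which is only a rough approximation.

```python
import struct

# Standard ELF relocation entry layouts (little-endian, no padding):
#   Elf64_Rela: r_offset (u64), r_info (u64), r_addend (s64)
#   Elf32_Rela: r_offset (u32), r_info (u32), r_addend (s32)
#   Elf32_Rel:  r_offset (u32), r_info (u32)
ELF64_RELA_SIZE = struct.calcsize("<QQq")
ELF32_RELA_SIZE = struct.calcsize("<IIi")
ELF32_REL_SIZE = struct.calcsize("<II")

# Size of .rela.dyn in the x86-64 libxul.so measured in this post.
RELA_DYN_BYTES = 5_437_560

# Number of relocation entries in that section.
relocation_count = RELA_DYN_BYTES // ELF64_RELA_SIZE

# Assumption: the same number of relocations on x86, stored as Elf32_Rel.
projected_x86_bytes = relocation_count * ELF32_REL_SIZE

if __name__ == "__main__":
    print("Elf64_Rela:", ELF64_RELA_SIZE, "bytes")
    print("Elf32_Rela:", ELF32_RELA_SIZE, "bytes")
    print("Elf32_Rel: ", ELF32_REL_SIZE, "bytes")
    print("relocations in .rela.dyn:", relocation_count)
    print("projected x86 size:", projected_x86_bytes, "bytes")
```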

Knowing the above is obviously nothing new, but like with the previous data, its usefulness comes from gathering new data after various experiments and comparing.

Unfortunately, as can be seen when zooming in, these figures aren't entirely accurate: there are many actual accesses in places where the systemtap script didn't catch kernel readaheads. This means the kernel reads more than what is accounted for above, which makes the actual use percentage appear higher than it is (but probably only slightly). I don't know why some accesses in `mmap()`ed memory either don't trigger a `__do_page_cache_readahead` or trigger an actual readahead longer than what was requested from `__do_page_cache_readahead`. Understanding this is important to get a better grasp of what the kernel really does during library initialization. This is why I hadn't included the read length in the previous graphs.
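One way to quantify the discrepancy is to list the pages that were accessed but never covered by a recorded readahead. The sketch below assumes the two data sets are available as plain Python values (readaheads as `(offset, length)` byte ranges, accesses as byte offsets); those formats and the function names are hypothetical and do not reflect the actual output of the systemtap script or icegrind.

```python
PAGE_SIZE = 4096


def pages_of_ranges(ranges):
    """Return the set of page numbers touched by (offset, length) byte ranges."""
    pages = set()
    for offset, length in ranges:
        if length <= 0:
            continue  # ignore empty ranges
        first = offset // PAGE_SIZE
        last = (offset + length - 1) // PAGE_SIZE
        pages.update(range(first, last + 1))
    return pages


def unaccounted_pages(readahead_ranges, accessed_offsets):
    """Pages with at least one access but no recorded readahead, sorted."""
    covered = pages_of_ranges(readahead_ranges)
    touched = {offset // PAGE_SIZE for offset in accessed_offsets}
    return sorted(touched - covered)
```

With a single recorded readahead of the first two pages, `unaccounted_pages([(0, 8192)], [10, 5000, 8192, 20000])` reports pages 2 and 4 as accessed without a matching readahead.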

Gathering similar data for OSX and Windows would be pretty interesting.

Update: I found the unaccounted page readaheads, but there are still a few pages that are not being read this way. I found a better probe point for both kinds of accounting, so I will come back with updated figures in an upcoming post.

2010-10-04 19:38:22+0900
